Datalore is a collaborative data science platform that takes the notebook experience to the next level.

This is a technical review of Datalore.

Datalore technical overview

Datalore stands out from similar platforms with its collaboration features, developer experience and ease of use.

Datalore is a surprisingly flexible platform for many needs, such as:

- Reporting

- Workflow automation

- Generic programming

- One-off data analysis

- Forecasts

Essentially, Datalore takes away the burden of managing the computation environment. It has some integrated tools for the use cases mentioned above, but most of the logic is implemented in plain Python or another programming language.

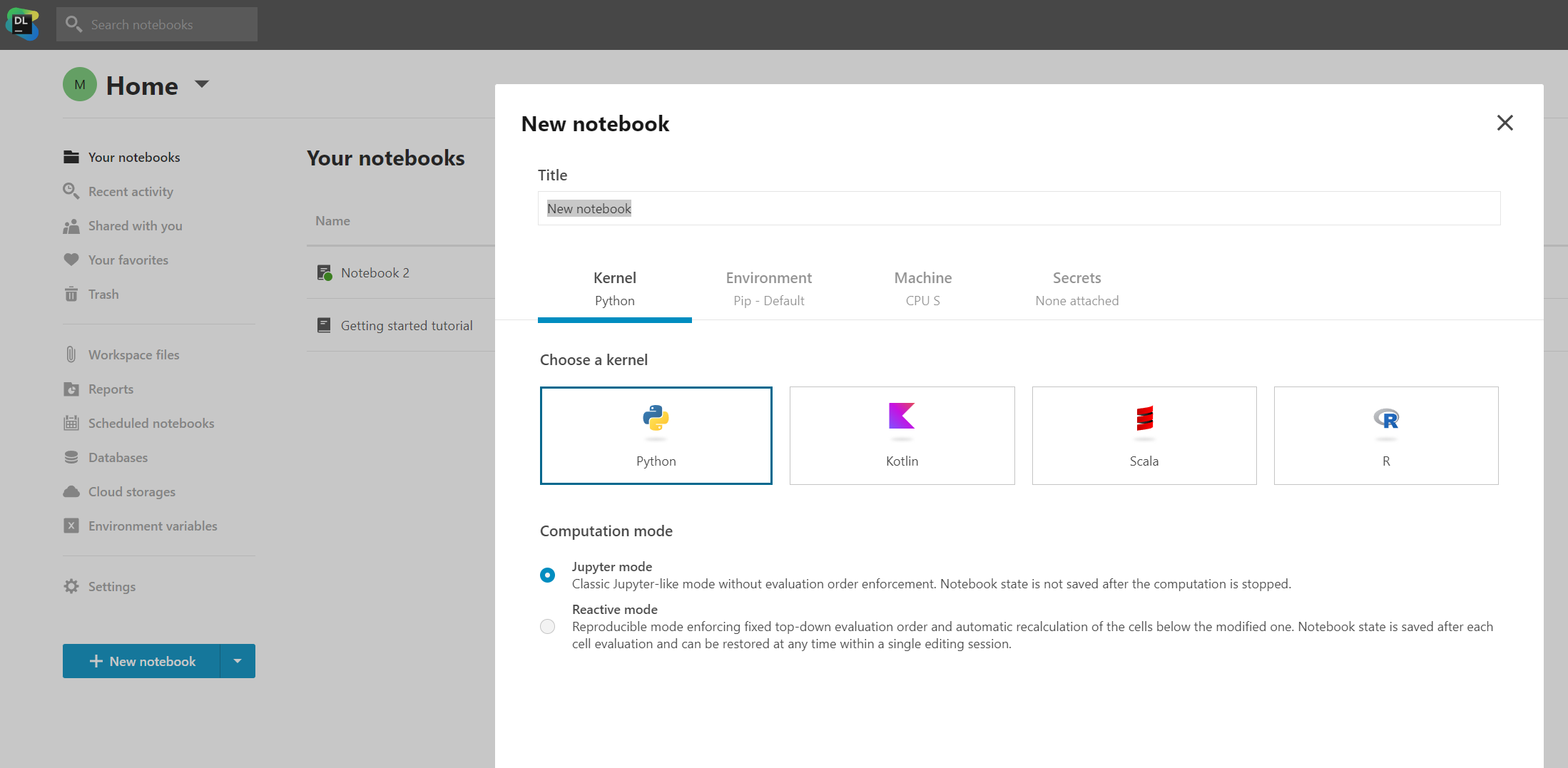

Programming languages supported by Datalore

Python obviously.

R and Scala have been added recently.

Datalore is the only data science platform that supports Kotlin! Maybe because Kotlin was originally developed by JetBrains, the company behind Datalore…

Datalore notebook is an isolated environment

Being an online environment, Datalore is always available. No more fighting with Python virtual environments, Docker configuration and version dependencies on your laptop.

In Datalore, each notebook is its own environment. This corresponds to a pattern where you create an isolated Docker container for each analysis project on your laptop.

Actually, "notebook" is a slightly misleading concept in Datalore. A Datalore notebook groups together a collection of Jupyter notebooks (what you would typically call a notebook) in multiple tabs. It is like having multiple sheets in an Excel workbook.

You can manage libraries and file mounts per Datalore notebook. However, some things, such as environment variables, are shared among the notebooks.

The environment is Jupyter compatible. This means you can both import and export notebooks as .ipynb files.
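Because .ipynb files are plain JSON, an exported notebook can be inspected with nothing but the Python standard library. Here is a minimal sketch, assuming the common nbformat 4 layout (a top-level `cells` list whose entries carry `cell_type` and `source`). The sample notebook and the helper name `code_cells` are made up for illustration and are not an actual Datalore export.

```python
import json

# A tiny hand-written notebook in the nbformat 4 layout (illustrative only,
# not an actual Datalore export).
sample_notebook = {
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Sales analysis"]},
        {
            "cell_type": "code",
            "metadata": {},
            "execution_count": None,
            "outputs": [],
            "source": ["import math\n", "print(math.sqrt(16))"],
        },
    ],
}


def code_cells(notebook: dict) -> list[str]:
    """Return the source of every code cell, one string per cell."""
    sources = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue
        source = cell.get("source", "")
        # nbformat allows source to be a single string or a list of lines.
        if isinstance(source, list):
            source = "".join(source)
        sources.append(source)
    return sources


# Round-trip through JSON text, as if the file had been exported and re-read.
exported_text = json.dumps(sample_notebook)
reloaded = json.loads(exported_text)

for cell_source in code_cells(reloaded):
    print(cell_source)
```

With a real export, you would replace the round-trip with `json.load` on the opened .ipynb file.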

Datalore workspaces

You can further organize Datalore with workspaces that contain one or more Datalore notebooks.

Workspaces can be created, for example, per project or per group of users. This makes notebook access management and overall structuring easier.

File storage and mounting in Datalore

Datalore has a concept of Notebook files and Workspace files.

Notebook files are a convenient place to store analysis-specific data files.

At some point you probably want to create your own .py file for your code utilities. Surprisingly many data science environments struggle with this simple task, but not Datalore! Importing your custom .py modules is easy from the notebook files.
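As a rough illustration of the idea, the sketch below writes a small helper module to disk and imports it. The module name `my_utils`, the function `normalize` and the temporary directory are all hypothetical. In Datalore you would place the .py file among the notebook files instead, and whether that location is already on the import path is an assumption worth checking in your own notebook.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Stand-in for the notebook files directory (an assumption for this sketch).
module_dir = Path(tempfile.mkdtemp())

# A hypothetical utility module with one helper function.
(module_dir / "my_utils.py").write_text(
    "def normalize(values):\n"
    "    # Scale values linearly so that they span 0..1.\n"
    "    low, high = min(values), max(values)\n"
    "    return [(v - low) / (high - low) for v in values]\n"
)

# Make the directory importable, then import the module by name.
if str(module_dir) not in sys.path:
    sys.path.insert(0, str(module_dir))
importlib.invalidate_caches()
my_utils = importlib.import_module("my_utils")

print(my_utils.normalize([10, 15, 20]))
```

Once the file sits somewhere importable, a plain `import my_utils` in a notebook cell does the same job as the `importlib` call here.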

Workspace files are accessible to all collaborators within the workspace.

Fetching data from cloud storage services is smart for larger files, or when the source files must be kept in their original location. At the moment, Datalore supports file storage mounting from Amazon S3 and Google Cloud Storage. The SMB and CIFS protocols are also supported.

Reports and inputs in Datalore

My absolute favorite feature is the ability to create and share reports from notebooks. In the report builder, you simply choose the cells you want to include. It is possible to show both the code and the output.

The report can be made public or shared only within your team. If the report is defined as interactive, the viewers can control the data to some extent. In the static mode, only commenting is allowed.

Combined with interactive inputs, this stuff becomes pretty cool!

The report builder view might change how we think about business intelligence. It gives data scientists a fast track to showcase results to their audiences.

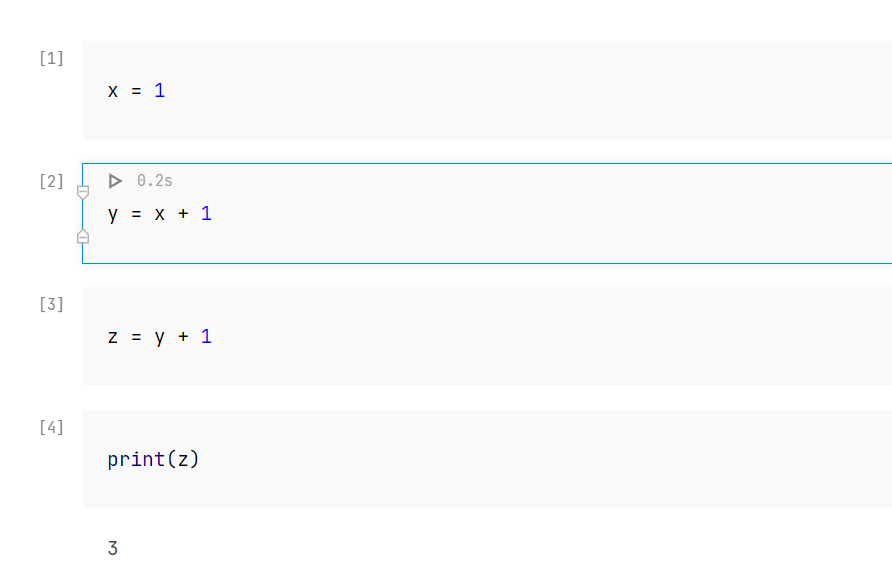

Reactive mode

A notebook can be defined as “reactive”. This means that it runs all the cells at once. Even when I clicked only the second cell, Datalore still ran the whole notebook.

Here are some cases where Datalore's reactive mode is beneficial:

- Let users update Datalore interactive reports

- Recalculate notebook when an input is changed

- Keep your analysis consistent

Code editor and developer experience in Datalore

Datalore is far ahead of other online data science platforms in developer experience. All code is executed from the notebook view.

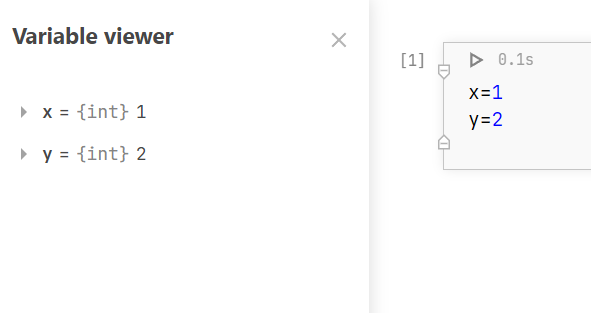

Variable viewer

The variable viewer is like the developer's table of contents for the workflow. That said, you also get an actual table of contents from the Markdown headers…

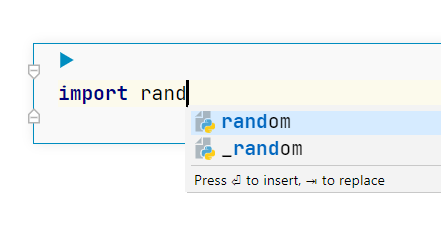

Code completion

Datalore can automatically suggest library and variable names once you start writing. You would think this is typical among data science platforms, but it definitely is not!

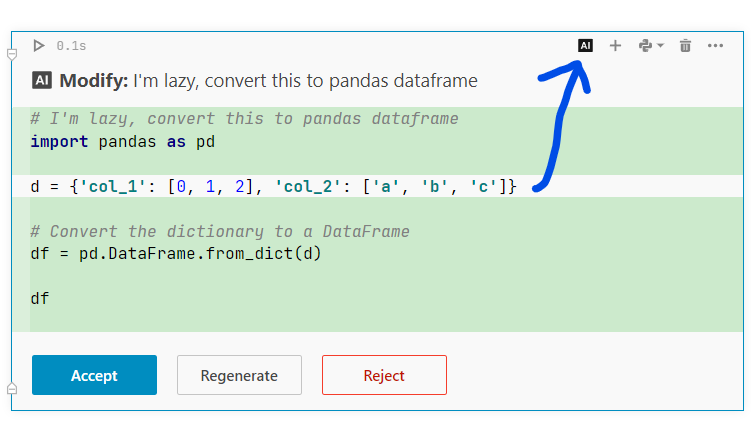

AI assistant

Despite working as an AI Engineer, I have had my doubts about the AI hype. Datalore has an AI assistant baked into the product, so this was actually the first time I tried such a utility for programming.

The results were impressive. It works pretty well in most cases and saves a few Google searches. For the best results, you still need to apply your own brain and experience.

Other UI goodies

- View notebooks side by side in Split view

- Hide the top menu with distraction-free mode

- Optimize library imports

Database integration

You can centrally manage the database connections to access data as easily as possible.

Datalore has an awesome database integration feature. It makes running SQL queries easy, which speeds up time to insights. Databases such as Google BigQuery and PostgreSQL are supported, among dozens of others.

Workflow automation

The notebooks can be scheduled to run at a specific time.

Combine this with a transactional email API such as EmailLabs and you have robust workflow automation!

As an example, data analysis results could be calculated each night and delivered by email automatically.
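To make the nightly report idea concrete, here is a minimal sketch using only Python's standard library. It builds the message and leaves sending to a separate function. The SMTP host, port, credentials, addresses and the `results` dictionary are placeholders, and the sketch uses plain SMTP rather than the EmailLabs API, whose interface is not covered in this review.

```python
import smtplib
from email.message import EmailMessage


def build_report_email(results: dict, sender: str, recipient: str) -> EmailMessage:
    """Format analysis results as a plain-text email."""
    message = EmailMessage()
    message["Subject"] = "Nightly analysis results"
    message["From"] = sender
    message["To"] = recipient
    lines = [f"{name}: {value}" for name, value in results.items()]
    message.set_content("\n".join(lines))
    return message


def send_email(message: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    """Send the message over SMTP with STARTTLS (server details are placeholders)."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(message)


# Placeholder results; a scheduled notebook would compute these instead.
results = {"orders": 128, "revenue_eur": 4520.5}
email = build_report_email(results, "reports@example.com", "team@example.com")
print(email["Subject"])
print(email.get_content())
```

In a scheduled notebook, the last cell would call `send_email` with real server details, ideally read from environment variables rather than hard-coded.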

Datalore starts fast!

Compute instances start and restart almost instantly. This is a big deal for developer experience.

Too many library installations might make the startup slow. Fortunately, the most common packages are included in the default environment.

Parallel computation for large datasets

Datalore does not support parallel computation frameworks such as Dask or Spark out of the box.

However, Datalore integrates with Google BigQuery, which is one way to prepare larger datasets. You can run SQL directly in the notebooks, so heavy lifting is relatively easy to do on the database side.

Notebooks are a sufficient interface for building simple data pipelines during the experimentation phase of data science.
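The pattern of pushing the heavy lifting to the database can be sketched as follows. This uses an in-memory SQLite database from the standard library as a stand-in for BigQuery, so the `sales` table and its columns are invented for illustration, and a real Datalore database connection would be configured differently.

```python
import sqlite3

# In-memory SQLite stands in for a real warehouse such as BigQuery.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE sales (region TEXT, amount REAL)")
connection.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north", 120.0), ("north", 80.0), ("south", 50.0), ("south", 25.0), ("south", 25.0)],
)

# Aggregate inside the database so only the small summary reaches Python.
summary = connection.execute(
    """
    SELECT region, COUNT(*) AS order_count, SUM(amount) AS total_amount
    FROM sales
    GROUP BY region
    ORDER BY region
    """
).fetchall()
connection.close()

for region, order_count, total_amount in summary:
    print(region, order_count, total_amount)
```

The point of the design is that the notebook only ever holds the aggregated rows, however large the underlying table is.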

Building machine learning models in Datalore

Datalore has everything it takes to develop machine learning models such as linear regression, random forest or even neural networks.

For example, scikit-learn and Keras are pre-installed in the default environments.

The Python ecosystem has plenty of other libraries for automating the machine learning development process.

However, at its heart Datalore is a generic analytics platform for experimentation. For production-grade machine learning there might be better products.

Summary of Datalore tech review

Datalore is a generic data science platform. Still, it is flexible enough to also handle reporting and workflow automation.

Datalore provides essential features to work with notebooks and collaborate with other team members. The developer experience is unprecedented.
