Kubernetes has been everywhere lately, especially in MLOps, where it is used to manage the plethora of different tasks such as training, serving and registering models.

I knew that Kubernetes is an open source tool, originally from Google, for managing a fleet of applications. My understanding was that it has largely won the competition against other technologies such as Docker Swarm. Kubernetes can be installed on your own servers or used as a managed cloud service.

As it seems almost inevitable that I will work with Kubernetes sooner or later, I wanted to test it in practice.

Background of the Kubernetes blog post

This blog post was written during my work time at Silo AI as part of an internal innovation project. The aim was to explore possibilities for publishing expert blog posts on the company website. After a discussion with the marketing team, we decided to publish this sample post on my personal blog.

Flask Kubernetes code repository

The code and instructions can be found in the flask-kubernetes GitHub repository.

Understanding how Kubernetes works on high level

I started by Googling: how Kubernetes works.

It seems that Kubernetes is meant specifically for containers, not just any kind of application. It takes care of many aspects of the environment, such as resource provisioning and failure management.

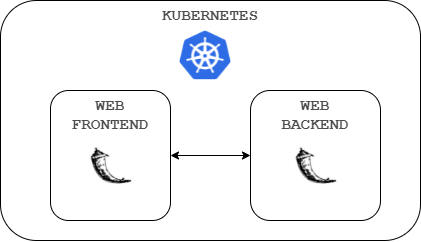

Maybe I could try installing Kubernetes on my PC. I could create a super simple local web application with two containers: a backend and a frontend. This would be a good demonstration of how multiple apps interact with each other.

Installing Kubernetes

Next search phrase: download Kubernetes.

I wanted to install it on the Linux instance running on my Windows machine (WSL).

The Kubernetes download page had a huge list of components to install. Which ones do I need?

More googling. It seems that kubectl is the main tool. I learned, for example, that kubectl convert is a tool for migrating configuration files between different API versions. No need for that at this point.

Somehow I found out that there is a tool for local Kubernetes development: Minikube. It is meant for running a single-node cluster, whereas Kubernetes itself is capable of utilizing multiple nodes.

Minikube tutorial

The getting started guide of Minikube is really great, and there is no need to go through it here. I was able to get most things done without additional effort. I had to run minikube start twice and restart my WSL at some point, but otherwise the process was surprisingly smooth.

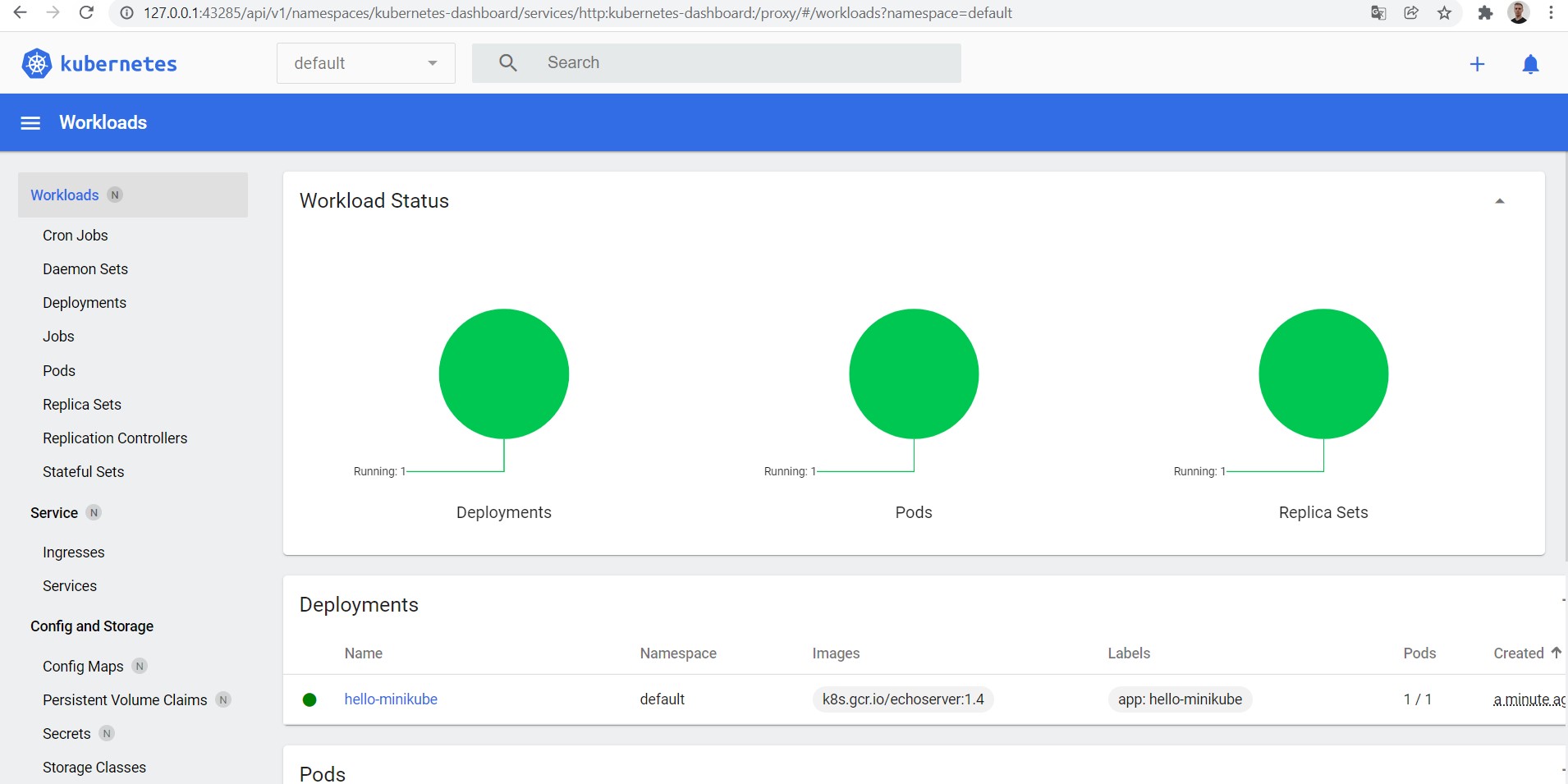

After deploying the tutorial’s sample pod I saw this by running minikube dashboard:

It is not uncommon to spend hours on Stack Overflow and run different commands literally tens of times before things start to work. You do not know whether you have the wrong command, whether you are missing a hyphen somewhere, or whether you are even doing the right thing. Sometimes it is simply trial and error.

During the installation process, I learned that Kubernetes supports various container runtimes. Personally, I have experience only with Docker.

The concept of how Kubernetes works is kind of confusing at first: you install Docker (or another container engine) to run Kubernetes, which then runs other containers. It is like buying a forklift to lift other forklifts in a forklift factory…

Basic case: Create a Python Flask app in Kubernetes

The Minikube tutorial did not help me understand how to run my own application in Kubernetes. This is where the time-consuming part started.

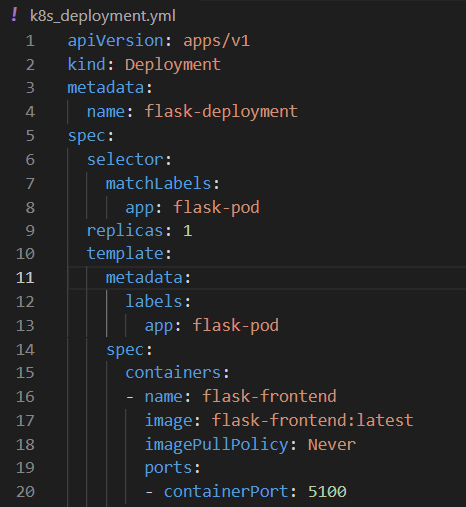

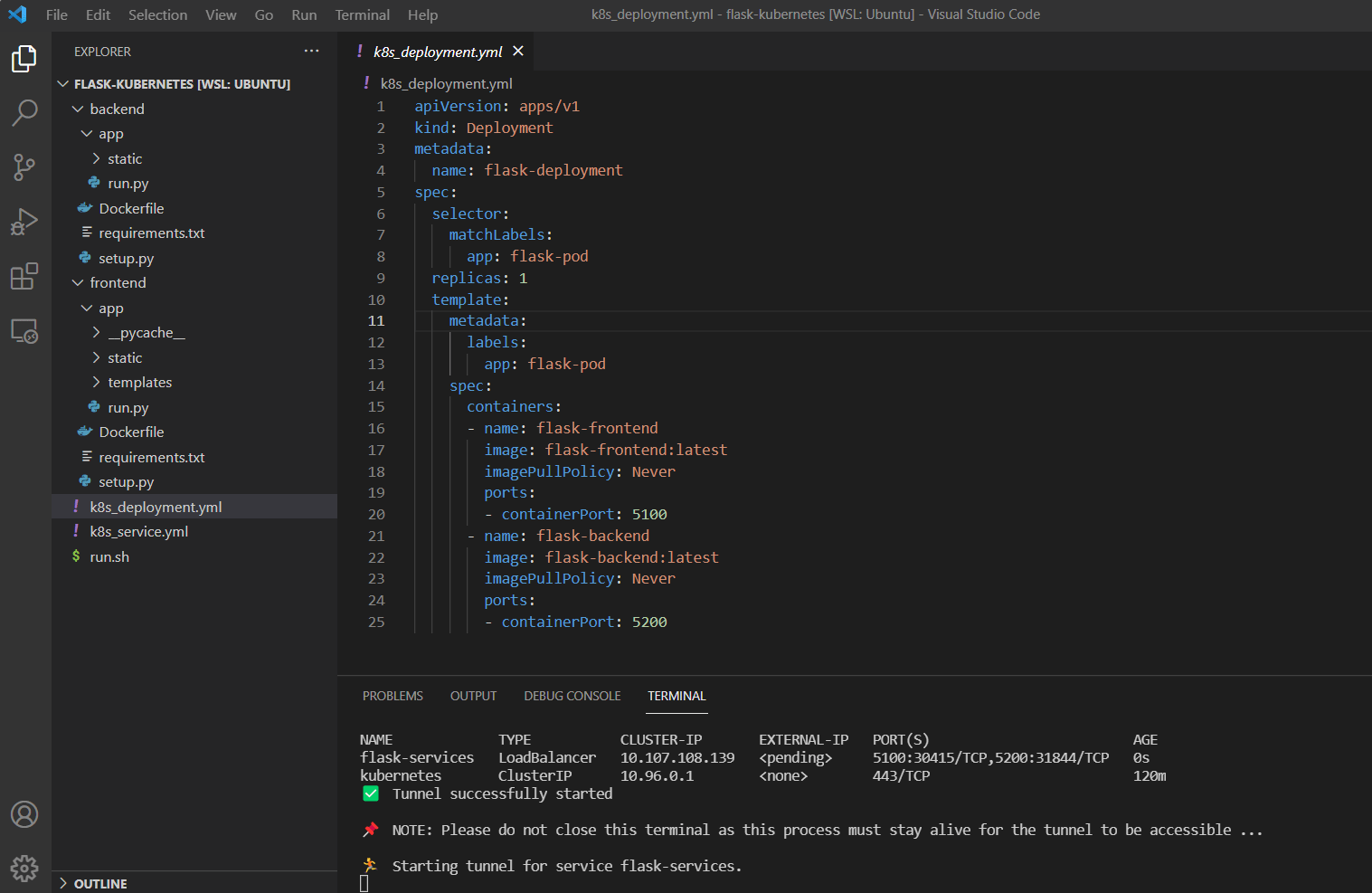

I read lots of blog posts, discussion forums and so on. Most of the delays happened when I was trying to load a Docker image from my laptop into the Minikube instance (which ran on the same laptop). The solution was to set imagePullPolicy to Never, so that Kubernetes does not try to pull the image from an external registry.
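To make the imagePullPolicy fix concrete, here is a minimal sketch of a Deployment for the frontend. It builds the manifest as a Python dict and prints it as JSON, which kubectl apply accepts just like YAML. The names flask-frontend, the image tag flask-frontend:latest and port 5000 are assumptions for illustration, not taken from the repository. Note that when imagePullPolicy is omitted and the image tag is :latest, Kubernetes defaults to Always, which is one reason it keeps trying to reach a registry.

```python
import json

# Hypothetical names used for illustration only.
APP_NAME = "flask-frontend"
IMAGE = "flask-frontend:latest"
PORT = 5000  # assumed port the Flask app listens on

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP_NAME},
    "spec": {
        "replicas": 1,  # desired number of pods
        # The Deployment manages every pod carrying this label...
        "selector": {"matchLabels": {"app": APP_NAME}},
        "template": {
            # ...so the pod template must carry the same label.
            "metadata": {"labels": {"app": APP_NAME}},
            "spec": {
                "containers": [
                    {
                        "name": APP_NAME,
                        "image": IMAGE,
                        # Use the image already present on the Minikube node
                        # and never pull it from an external registry.
                        "imagePullPolicy": "Never",
                        "ports": [{"containerPort": PORT}],
                    }
                ]
            },
        },
    },
}

if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML ones.
    print(json.dumps(deployment, indent=2))
```

Assuming the image has already been made available to Minikube (for example with minikube image load flask-frontend:latest), the output could be applied with python deployment.py | kubectl apply -f -, where deployment.py is a hypothetical file name.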

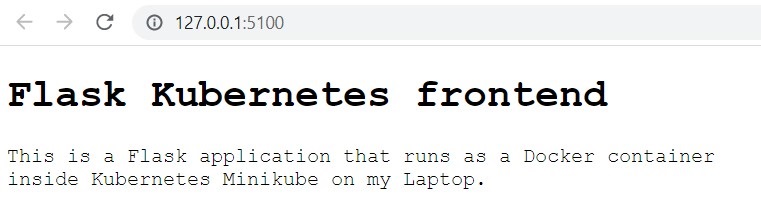

After many iterations, I was able to deploy my web app frontend as a pod in the local Kubernetes cluster. I chose Python Flask as the web framework because it is simple and familiar to me.

I like the declarative approach of Kubernetes: you request what you want in the deployment specification, and Kubernetes takes care of how to make that happen.

Here is an example of the specification file:

The application logic is in the container, and Kubernetes just takes care of how to run it. The result:

I was confused by the concepts of pod and deployment for a while. Weird things happened especially when I tried to delete a pod: every time I deleted one, it was created again, which was really annoying.

It took me a while to understand the difference between pods and deployments. Pods are created automatically according to the deployment specification, so deleting the deployment also removes the related pods.

I also understood why weird things happened when I tried to delete the pod: Kubernetes launched a new pod to replace the deleted one, exactly as declared in the deployment specification. Smart!
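The pod that kept coming back can be illustrated with a toy reconciliation loop. This is a deliberately simplified model, not the real Kubernetes controller code: in reality a Deployment manages a ReplicaSet, whose controller watches the API server and creates or deletes pods. The function name reconcile and the pod naming scheme are hypothetical. The core idea is the same, though: compare the desired number of replicas with the pods that actually exist and act on the difference.

```python
import itertools

# Toy model only. Real Kubernetes splits this between the Deployment and
# ReplicaSet controllers, which watch the API server instead of a Python set.
_pod_ids = itertools.count(1)


def reconcile(desired_replicas: int, running_pods: set[str], name: str = "flask-frontend") -> set[str]:
    """Return the pod set after one pass of 'make actual match desired'."""
    pods = set(running_pods)
    while len(pods) < desired_replicas:
        # Too few pods: start a replacement with a fresh name.
        pods.add(f"{name}-{next(_pod_ids)}")
    while len(pods) > desired_replicas:
        # Too many pods: stop one of the surplus pods.
        pods.remove(max(pods))
    return pods


pods = reconcile(1, set())   # the Deployment asks for one pod
deleted = next(iter(pods))
pods.discard(deleted)        # like "kubectl delete pod <name>"
pods = reconcile(1, pods)    # the loop notices and starts a new pod
print(deleted, "->", sorted(pods))
```

Deleting the Deployment itself is different: it removes the desired state, and Kubernetes garbage-collects the objects it owned, which is why the pods disappear for good.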

Advanced case: Make two Python Flask apps talk to each other

The goal was to make Kubernetes run two containers and make them communicate with each other. After all, component interaction is one of the most important use cases for Kubernetes, on top of resource management.
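For two containers to talk, the frontend needs a stable address for the backend, and in Kubernetes that is usually a Service. Below is a minimal sketch of one, built as a Python dict and printed as JSON for kubectl apply. It assumes the backend pods carry the label app: flask-backend and listen on port 5000; both are assumptions for illustration, not details from the repository. With cluster DNS enabled (as it is in Minikube by default), pods in the same namespace could then reach the backend at http://flask-backend:5000/.

```python
import json

# Hypothetical values: the label and port must match the backend Deployment.
backend_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "flask-backend"},  # becomes the DNS name other pods use
    "spec": {
        "type": "ClusterIP",  # default type: reachable only from inside the cluster
        "selector": {"app": "flask-backend"},  # send traffic to pods with this label
        "ports": [
            # port: what clients connect to; targetPort: what the container listens on
            {"protocol": "TCP", "port": 5000, "targetPort": 5000}
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(backend_service, indent=2))
```

Because the Service selects pods by label rather than by name, it keeps working when the backend pods are replaced and get new names and IP addresses.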

I tried to open the Kubernetes dashboard by running minikube dashboard. It seems that I needed to run minikube start first. Then it worked.

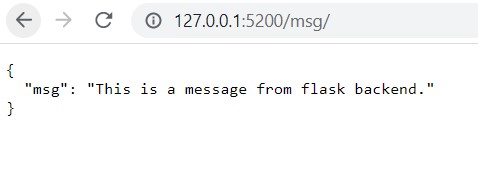

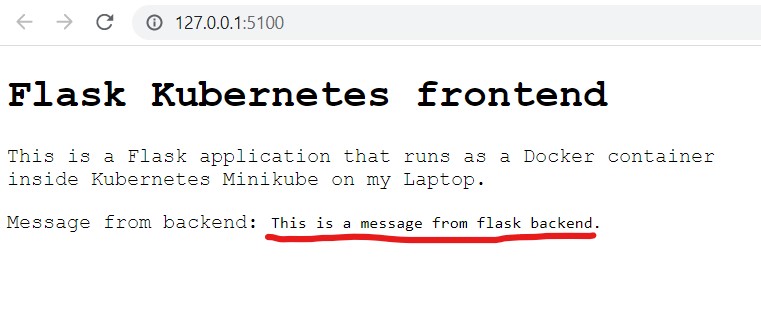

I built another container called flask-backend. It returned simple JSON from a single endpoint:
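A minimal Flask backend of this kind could look like the sketch below. The route and the message text are illustrative assumptions, not the code from the flask-kubernetes repository. Binding to 0.0.0.0 matters inside a container: Flask's development server listens only on 127.0.0.1 by default, which other pods cannot reach.

```python
from flask import Flask, jsonify

app = Flask(__name__)


@app.route("/")
def message():
    # The single endpoint returns a small JSON document (hypothetical content).
    return jsonify({"message": "Hello from flask-backend"})


if __name__ == "__main__":
    # Listen on all interfaces so the container port is reachable from other pods.
    app.run(host="0.0.0.0", port=5000)
```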

The frontend was modified to read the backend's message:
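On the frontend side, the part that reads the backend's message could be a small helper like the one below, called from a Flask route (for example return f"Backend says: {fetch_backend_message()}"). It is a sketch that uses only the standard library. The environment variable BACKEND_URL and its default http://flask-backend:5000/, which relies on a Service named flask-backend, are assumptions for illustration.

```python
import json
import os
import urllib.request

# Assumed default: a Service named "flask-backend" resolved through cluster DNS.
# Override with the BACKEND_URL environment variable, e.g. for local testing.
BACKEND_URL = os.environ.get("BACKEND_URL", "http://flask-backend:5000/")


def fetch_backend_message(url: str = BACKEND_URL, timeout: float = 2.0) -> str:
    """GET the backend endpoint and return the "message" field of its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return payload["message"]
```

Reading the URL from an environment variable keeps the same frontend image usable both inside Minikube and when the two apps run directly on a laptop.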

The final code repository looked like this. The whole system could be re-created with a single command in seconds, provided that Minikube is already installed.

Kubernetes vs Docker compose

Kubernetes deployment specifications look very much like Docker Compose files. What is the difference?

My understanding is that Docker Compose is simply a tool for building and running multiple Docker containers at once. It does not take any responsibility for managing the infrastructure.

According to the documentation, Docker Compose can be run in production, but only a single-server setup is recommended.

Let's simplify things a lot to make the distinction clear for beginners: a software development team creates the container images with Docker Compose, and the DevOps team operates them with Kubernetes.

What does it mean that Kubernetes is declarative?

I have heard the term declarative in the context of SQL queries.

It means that the developer describes what they want, and it is up to the declarative system to decide how to achieve the requested end result. Just as an SQL database engine decides how to retrieve the data for a given query, Kubernetes decides how to keep the specified deployment in its desired state.
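The SQL side of this analogy can be seen with Python's built-in sqlite3 module. In this sketch the query text never changes, yet SQLite's planner picks a different strategy once an index exists. The table, column and index names are made up for illustration, and the exact wording of the plan depends on the SQLite version.

```python
import sqlite3

# The query says WHAT we want; SQLite's planner decides HOW to fetch it.
QUERY = "SELECT stage FROM models WHERE name = ?"


def query_plan(conn: sqlite3.Connection) -> str:
    """Return SQLite's chosen strategy for QUERY as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + QUERY, ("resnet",)).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the detail text


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE models (name TEXT, stage TEXT)")
conn.executemany(
    "INSERT INTO models VALUES (?, ?)",
    [("resnet", "serving"), ("bert", "training")],
)

plan_without_index = query_plan(conn)  # planner has to scan the whole table
conn.execute("CREATE INDEX idx_models_name ON models (name)")
plan_with_index = query_plan(conn)     # same query, planner now searches the index

print(plan_without_index)
print(plan_with_index)
```

The Kubernetes counterpart is the deployment specification: it stays the same while Kubernetes chooses which node runs a pod or when to replace one.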

Kubernetes use cases

Kubernetes can be installed both in the cloud and on-premises.

In an on-premises environment, it might give a significant benefit by standardizing many aspects of deployments. Migrating to another environment that also runs Kubernetes should be quite doable.

Major cloud providers sell managed Kubernetes clusters as a service, which is definitely a good choice for many use cases. In the cloud, however, I think there are more options. For MLOps infrastructure, there are solutions like Databricks that provide a packaged product.

Summary of the Kubernetes test

I was able to make the Flask web backend and frontend talk to each other inside Kubernetes in two days, without ever having used Kubernetes before.

In my opinion, the official tutorials are most often the quickest way to start learning a new tool. For advanced use cases, I would definitely consult our other Kubernetes experts at Silo AI.