This blog post compares the machine learning platforms of the major cloud providers Azure, AWS and Google Cloud. The Databricks platform is also included.

IBM and Alibaba clouds were left out because they are less popular among developers.

Another post about SaaS-type machine learning (ML) platforms is coming.

The important questions are: what features does each platform have, what are the costs based on, and what are the use cases?

The blog is not sponsored.

Ranking of ML platforms in major clouds

This is my personal ranking as a data scientist and machine learning engineer:

| # | ML Platform | Comments |
|---|---|---|
| 1 | Google Vertex AI | Laser-sharp focus on the ML process. Easy, clean and container-oriented mindset. |
| 2 | Databricks | Most comprehensive for ambitious teams in ML and data engineering. Integrated Spark. |
| 3 | AWS SageMaker | Developer mindset, connects independent services. |
| 4 | Azure Machine Learning | Closely bound to Microsoft products. |

My experience with ML platforms

I got certified as a Google Cloud Professional Machine Learning Engineer in early 2023. A significant part of the training was about the unified ML platform Vertex AI.

Additionally, I have 3 years of data science and machine learning engineering experience with Databricks. I became familiar with IBM Watson Studio while leading ML platform migration activities.

Even though IBM Watson Studio was excluded from this review, it does the job well even for production workloads. IBM Cloud users should take a look at it.

AWS and Azure clouds are familiar to me and I have run some tests with their ML platforms. I even did a deep dive into Azure's ML product to compare it against Databricks.

Machine learning platforms from the major cloud providers

All major cloud vendors have their own services to manage the machine learning lifecycle:

| Company | Service |
|---|---|
| AWS | SageMaker |
| Google | Vertex AI |
| Microsoft | Azure Machine Learning |
| Databricks | Databricks |

Databricks is slightly different in the sense that under the hood it uses cloud computing resources from Azure, AWS, Google Cloud or Alibaba Cloud.

The easiest way to access the platforms is through the web browser portals provided by the cloud vendors. Each vendor also provides a command line interface.

Summary of features on different cloud ML platforms

Most of the terms are explained further in the previous article, "What is a machine learning platform?"

| Feature | SageMaker | Azure ML | Vertex AI | Databricks | Explanation |
|---|---|---|---|---|---|
| Python | Yes | Yes | Yes | Yes | Python programming language support. |
| R | RStudio license required | Yes | Yes | Yes | R programming language support. |
| SQL and metadata | AWS Athena | Designer | Metadata only, no SQL | Hive metastore | Read any data source using SQL syntax. |
| Spark and Scala | Requires AWS EMR | Requires Azure Synapse | Requires Dataproc | Integrated | Run distributed computing with Spark, which is written in Scala. |
| Model registry | Yes | Yes | Yes | MLflow | Ability to save, load, list, tag and version multiple models. |
| Experiments | Yes | Yes | TensorBoard | MLflow | Store metrics and details of ML model training. |
| Feature store | Yes | No | Yes | Yes | Save precalculated tabular data to be used by other team members. |
| Scheduling | Yes | Takes effort | Yes for single notebooks; pipelines require Cloud Scheduler | Yes | Run notebooks, jobs or pipelines at regular intervals. |
| Orchestration or pipelines | Yes | Designer | Yes | Job orchestration | Combine single data processing, training or prediction tasks into a chain of events. |
| Publish endpoint | Internal only | Yes | Yes | Yes | Get prediction results through an API. |
| Notebook co-working | Yes | Code only, no shared compute | Yes | Yes | Enable team members to work with the same code and computing resources. |
| AutoML | Yes | Yes | Yes | Yes | Try to automatically find the model that gives the best results. |

Summary of cloud ML platform features.

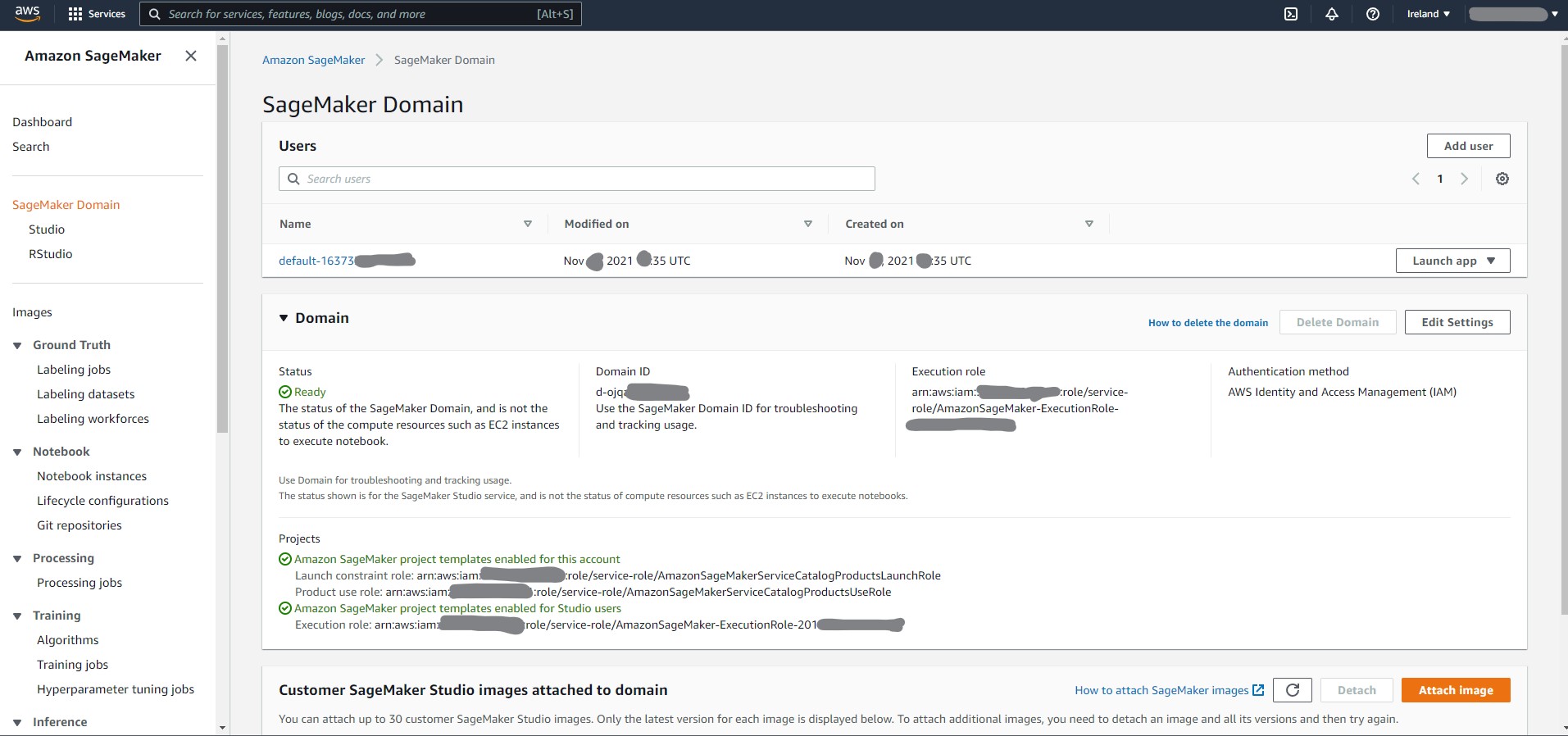
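The feature table can also be treated as data when shortlisting platforms for a specific team. The following is only a minimal sketch: the dictionary is a hand-typed subset of the table, and the feature keys and the `platforms_supporting` helper are names made up for this illustration. A value of `True` means the table lists the feature as available without another service or an extra license.

```python
# Hand-typed subset of the feature table (illustrative, not exhaustive).
# True = listed as available without another service or an extra license.
FEATURES = {
    "SageMaker": {
        "integrated_spark": False,         # requires AWS EMR
        "r_without_extra_license": False,  # RStudio license required
        "feature_store": True,
        "public_endpoint": False,          # internal only
        "shared_notebook_compute": True,
    },
    "Azure ML": {
        "integrated_spark": False,         # requires Azure Synapse
        "r_without_extra_license": True,
        "feature_store": False,
        "public_endpoint": True,
        "shared_notebook_compute": False,  # code only, no shared compute
    },
    "Vertex AI": {
        "integrated_spark": False,         # requires Dataproc
        "r_without_extra_license": True,
        "feature_store": True,
        "public_endpoint": True,
        "shared_notebook_compute": True,
    },
    "Databricks": {
        "integrated_spark": True,
        "r_without_extra_license": True,
        "feature_store": True,
        "public_endpoint": True,
        "shared_notebook_compute": True,
    },
}


def platforms_supporting(required):
    """Return the platforms where every required feature is True."""
    return [
        name
        for name, features in FEATURES.items()
        if all(features[key] for key in required)
    ]


if __name__ == "__main__":
    # Example: a team that needs a feature store and shared notebook compute.
    print(platforms_supporting(["feature_store", "shared_notebook_compute"]))
```

A yes/no encoding loses nuance such as "Takes effort", so a filter like this is better suited to building a shortlist than to making the final decision.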

AWS SageMaker

The whole AWS cloud design mindset could be described as “developer first”. The downside is that the user interface to manage SageMaker feels too technical.

SageMaker was released in 2017.

Pros

As always with AWS, the SageMaker documentation is great.

It is possible to run Spark by launching an EMR cluster and connecting to it from a SageMaker Studio notebook. For data transformation, you can launch a Processing job based on a container image, for example one built with Docker.

On a conceptual level it seems logical that experimental work in SageMaker Studio and deployment through containers have been separated.

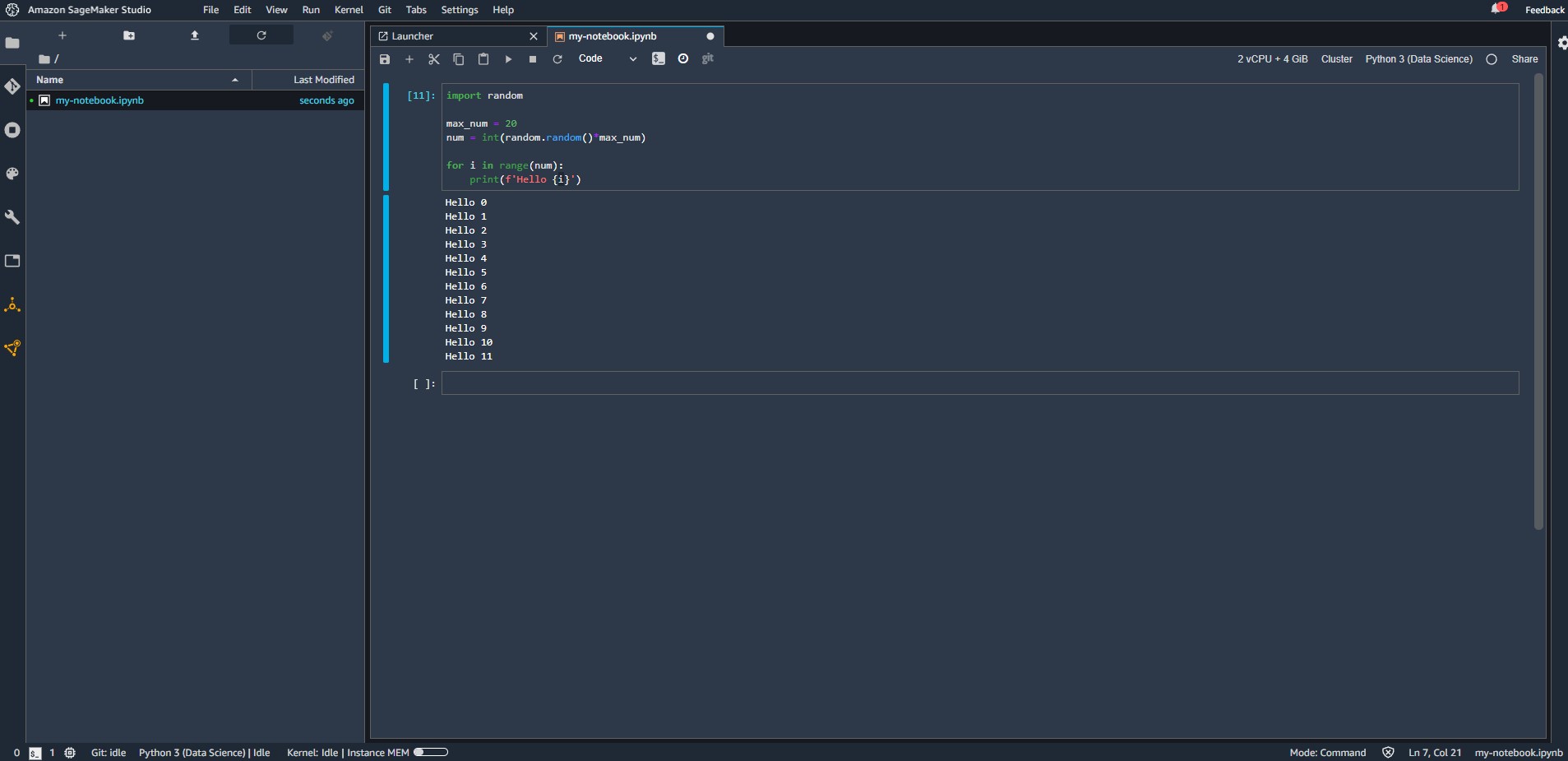

The Studio enables multiple team members to access the same code. It runs on top of JupyterLab.

For teams using the R language, SageMaker provides a full-blown RStudio environment.

SageMaker has the Edge Manager to deploy ML models on physical devices. This seems like an interesting option for those who need it.

Cons

The SageMaker Studio user experience is not the most user friendly. It is somewhat difficult to find which link needs to be clicked or how to proceed with the setup. AWS keeps services as independent as possible. This makes solutions modular but sometimes complex to understand.

R (RStudio) and Python (Studio) development have been clearly isolated from each other. RStudio requires a paid license. This moves R closer to MATLAB, which only specialized organizations choose over Python because of the license fees.

It appears that notebooks cannot be scheduled directly from Studio, which is dedicated to experimental work. Instead, Processing and Training jobs need to be containerized and deployed in a separate process.

It has not been made clear why the older Notebook instances still exist alongside the newer SageMaker Studio. My assumption is that Notebook instances will be deprecated at some point.

Target users

AWS cloud users. Teams looking for a clear distinction between exploration and production workloads. Teams with solid cloud infrastructure skills. RStudio users.

Pricing

Price per computation hour, plus other AWS resources such as S3 and ECR. The feature store has a separate cost. Read more about SageMaker pricing.

Microsoft Azure Machine Learning

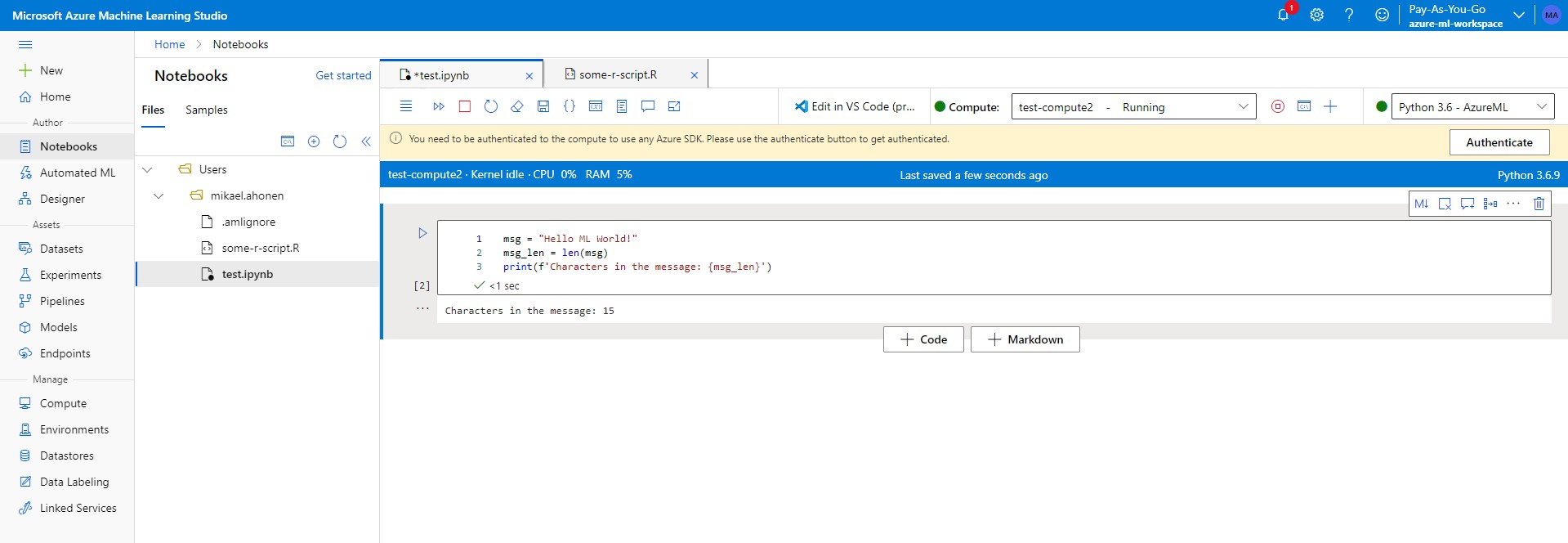

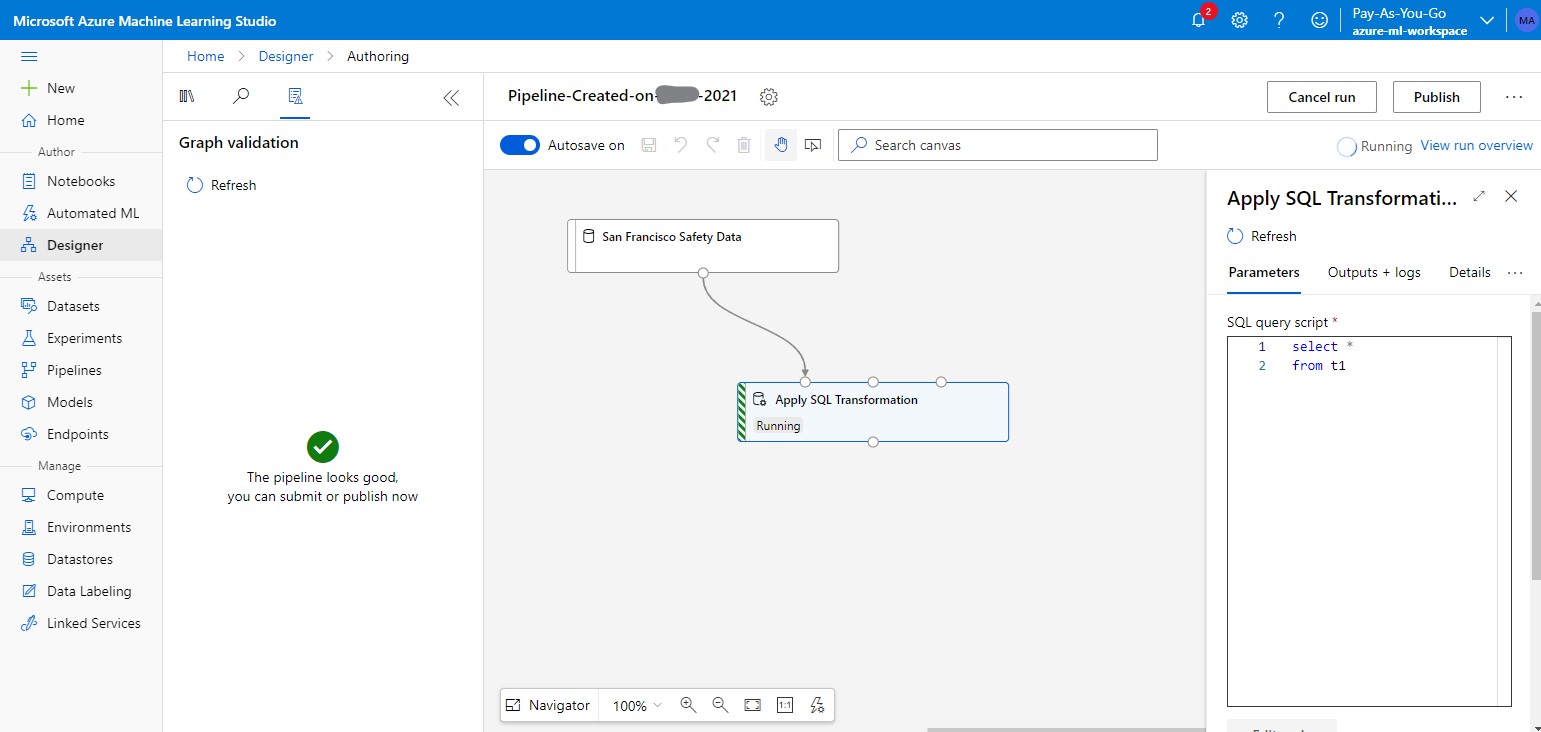

Microsoft Azure Machine Learning feels like a product that does not try to go over the top.

The notebooks are integrated into the Azure web portal UI.

Azure Machine Learning has its roots in 2015, but the current platform has existed since 2018.

Pros

Azure Portal UI is clean and intuitive. It was easy to get started. The system feels like one compact package.

The workflow designer makes life easier for less experienced coders and possibly makes model building faster.

Compared to the alternative platforms, the platform and its documentation are focused on use cases rather than technical details.

Cons

Two data scientists can access the same notebook and code. It is surprising, however, that Azure Machine Learning does not allow multiple users to share the same computation resource.

Microsoft tends to integrate its services deeply with the company's other products, and Azure Machine Learning is no exception. The workflow designer, Spark jobs through the Synapse database engine and Microsoft-specific libraries create a deep dependency on other Microsoft offerings.

Finding answers to some technical questions in the documentation was surprisingly difficult.

Scheduling notebooks, experiments or pipelines to run has not been made easy.

Target users

Individual data scientists. Microsoft customers. Cost-aware organizations. Teams looking for a high-level tool to build models quickly.

Pricing

No service cost, only the deployed Azure components. In practice, computation time is the most significant part. More about pricing.

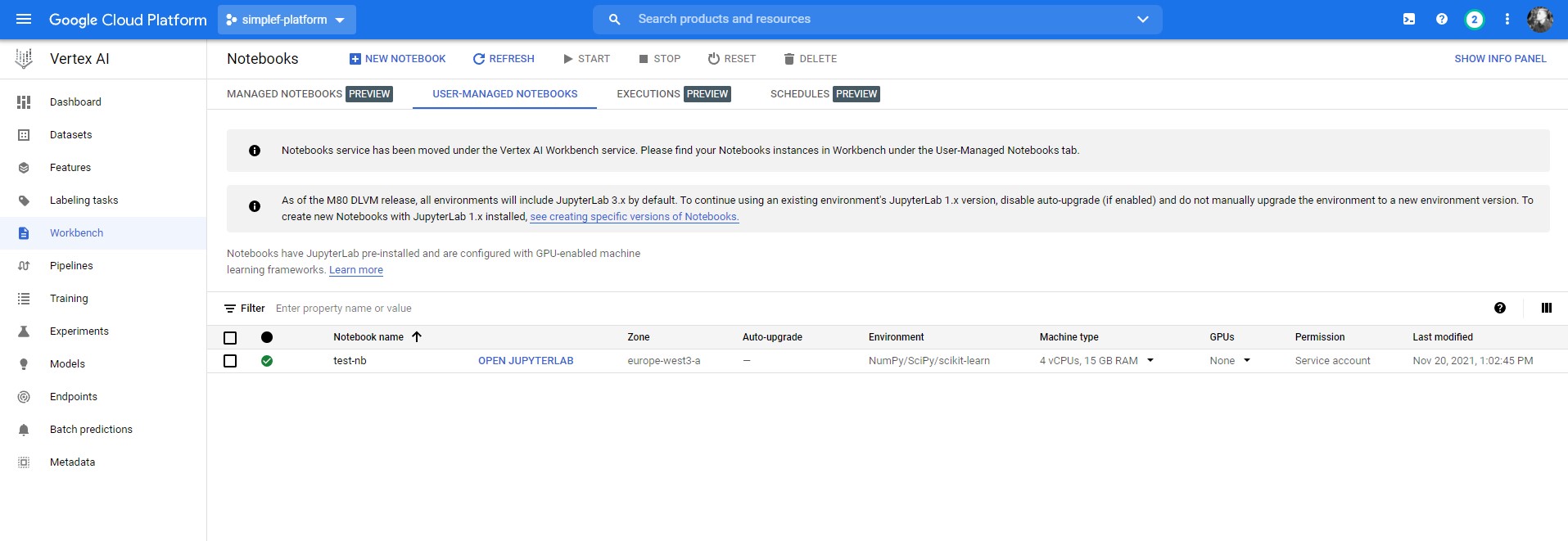

Google Cloud Vertex AI

Google has an exciting set of features in its ML offering.

The backend and all best practices are tightly connected to TensorFlow. The pipelines can also natively run Kubeflow Pipelines.

The TensorFlow-first attitude does not leave others empty-handed. Containers allow ML engineers to run PyTorch solutions or any other preferred framework.

In the Google Cloud console, the product looks similar to what the competitors offer, but the user interface is the cleanest by far.

Read the full Vertex AI review here.

Pros

During notebook creation, Vertex AI makes it clear that all computing instances have GPU capability. Google-developed Tensor Processing Units (TPUs) are also available.

The whole workflow is thought through and structured in a systematic way. Google is a step ahead especially with the adoption of container technology.

Explainable AI functionality could be worth further exploration.

The Workbench is based on the open source JupyterLab, so the UI might be familiar to many.

Spark jobs can be run in another Google Cloud service, Dataproc. Google suggests Dataproc only for legacy Spark jobs, as the TensorFlow ecosystem has intelligent approaches to train ML models from raw data in parallel.

Cons

Vertex AI bundles together so many services that it is almost overwhelming. Sometimes it is unclear what part of the offering should be used and how they link to each other.

Vertex AI was released in May 2021. The new stack may still suffer from growing pains.

Target users

Google Cloud users. Teams using TensorFlow or Kubeflow. Teams that have not yet chosen their cloud. Those who seek new, innovative ideas for their machine learning workflow. Container-oriented teams.

Pricing

Google Cloud resources. Pay by computation hour and storage month as usual. Higher pricing for AutoML instances. More about Vertex AI pricing.

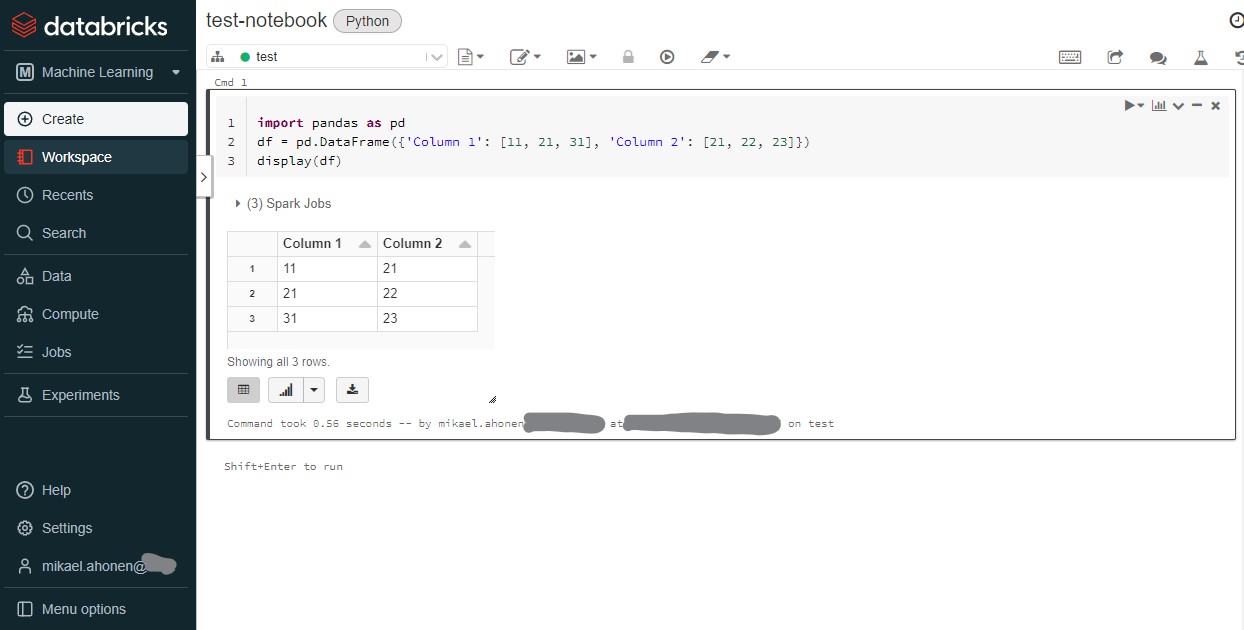

Databricks

The company likes to call its architecture a “Lakehouse”, which combines… actually pretty much everything from data processing to analytics.

Databricks was created by the team that invented the distributed computation framework Spark. It is no surprise that Spark is also integrated seamlessly into the Databricks platform.

Databricks was founded in 2013.

Pros

Overall, the platform is relatively intuitive to use. Because it is cloud agnostic, it pushes you less towards the tools of a single cloud vendor.

However, Databricks is a significant open source contributor to frameworks such as Delta Lake (a storage format) and MLflow (an ML management tool).

Databricks might be the most comprehensive platform of them all. It is relatively common for data engineering teams to routinely use the platform to build ETL pipelines.

It is convenient that notebooks can be directly scheduled without extra effort.

Cons

You have only notebooks for the code. There is no easy way to create plain .py files or libraries in the Databricks environment.

Databricks is not the strongest candidate for people who are accustomed to working with containers.

The platform has so many use cases that productive usage among all stakeholders might take some practice.

Target users

Large and demanding data teams, including data engineers, who need a robust environment for cooperation.

Pricing

The other cloud companies make their money from the consumed cloud resources, so they can offer the basic ML service for the cost of those resources. Databricks needs something extra to make a profit.

That is why it charges for DBUs (Databricks Units) per hour on top of the other resources. A DBU is nothing but a surcharge for the Databricks platform.
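To make the surcharge concrete, here is a rough sketch of the arithmetic. All numbers and function names are hypothetical and chosen only to keep the calculation simple. Real DBU consumption and DBU prices vary, so check the current Databricks and cloud provider price lists before budgeting.

```python
def hourly_cost(vm_price, dbu_per_hour, dbu_price):
    """Cost of one node for one hour: cloud VM price plus the DBU surcharge."""
    return vm_price + dbu_per_hour * dbu_price


def monthly_cost(nodes, hours_per_month, vm_price, dbu_per_hour, dbu_price):
    """Cost of a cluster that runs for a given number of hours per month."""
    return nodes * hours_per_month * hourly_cost(vm_price, dbu_per_hour, dbu_price)


if __name__ == "__main__":
    # Hypothetical prices in an arbitrary currency unit, not real list prices.
    vm_price = 0.5       # cloud provider charge per node-hour
    dbu_per_hour = 2.0   # DBUs consumed by one node per hour
    dbu_price = 0.25     # Databricks charge per DBU

    per_hour = hourly_cost(vm_price, dbu_per_hour, dbu_price)
    surcharge_share = dbu_per_hour * dbu_price / per_hour
    print(f"Per node-hour: {per_hour:.2f}, of which DBU share: {surcharge_share:.0%}")
    print(f"4 nodes, 40 hours: {monthly_cost(4, 40, vm_price, dbu_per_hour, dbu_price):.2f}")
```

With these made-up numbers the surcharge is half of the hourly price, which shows why the DBU rate deserves as much attention as the instance price when comparing Databricks against the native cloud platforms.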

Summary from the ML platform comparisons

You could say that the ML platforms from the big cloud companies have not been around for long. The initial releases are around 5 years old and there have been major overhauls during the last 3 years.

Each of the ML platforms was easy to test without causing a huge bill for my personal cloud accounts. Any team can get started with testing for only tens of euros per month.

At a quick glance, it is difficult to find huge differences between the ML platforms of the big cloud companies. If one feature is missing, it is usually easy to replace with another library or cloud component.

Also, there are multiple features and ways of doing the same thing. You feel like you are drowning in a flood of information. It is always better to find tools for a problem than a problem for the tools.

The offerings are evolving constantly, so I would keep monitoring the future plans of the products. Google in particular seems to be moving fast with its cloud products.

All of the companies make their business by packaging open source tools into commercial products. But be cautious. For example, Databricks loudly promotes its open source frameworks and libraries on its ML platform. Open source is not a guarantee of universal compatibility, but simply a promise of free availability.

It feels like AWS, Microsoft and Google just try to fulfil the minimum requirements with their ML platforms to keep customers in their cloud environments. For them, a tie in the game might be enough.

Updates on this post

| Date | Update |
|---|---|
| 2023-01-06 | No Google experience -> Google Certified. Update Vertex AI section. Switch Vertex AI and Databricks in ranking. |
