Go to the Spark + Python tutorial in AWS Glue on Solita’s data blog.

Spark and parallel computing

A shop cashier can serve only a limited number of customers at a time. Sharp focus and previous experience can improve their performance to some extent, but a better way to increase throughput is to have more cashiers.

Parallel computation works on the same core idea. Instead of relying on a single supercomputer, it can be smarter to have several ordinary computation units working on the same task simultaneously.
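The cashier analogy can be sketched in a few lines of plain Python. This is only an illustration of the split, work, combine pattern, not Spark code: the function names (`serve_queue`, `split_into_queues`, `total_items`) and the sample basket sizes are made up for this example, and a thread pool stands in for the separate machines a Spark cluster would use.

```python
from concurrent.futures import ThreadPoolExecutor


def serve_queue(customers):
    """One 'cashier': total the basket sizes in its own queue."""
    return sum(customers)


def split_into_queues(customers, cashiers):
    """Deal customers round-robin into one queue per cashier."""
    return [customers[i::cashiers] for i in range(cashiers)]


def total_items(customers, cashiers=4):
    """Serve all queues concurrently, then combine the partial results."""
    queues = split_into_queues(customers, cashiers)
    with ThreadPoolExecutor(max_workers=cashiers) as pool:
        partial_totals = list(pool.map(serve_queue, queues))
    return sum(partial_totals)


# Hypothetical basket sizes, one number per customer.
baskets = [3, 12, 7, 1, 9, 4, 6, 2]
print(total_items(baskets, cashiers=3))  # → 44
```

Each worker sees only its own slice of the data, and the final answer is assembled from the partial results. Spark applies the same idea at a much larger scale by spreading the slices over many processes and machines.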

Spark is an open source framework for parallel computation from the Apache Software Foundation.

Spark can be described as a computation environment that is operated through a programming language of your choice. Python is a common companion for Spark thanks to its versatility, wide adoption and easy syntax.

Why run a Spark application on a cloud platform?

Spark is designed for big data. Even though you can install Spark on a laptop, memory, disk space and processing power would soon become the limiting factors. In real use cases Spark is often run on a cloud platform, and Amazon’s Glue service offers a “low” level option for that.

Setting up a scalable parallel computation cluster is not a breeze. AWS Glue provides a turnkey server environment, so the data developer only needs to write the Spark code.

Here you can find an introduction to AWS Glue.

A summary of the Spark + Python tutorial

The tutorial covers these topics:

- Create a data source for AWS Glue

- Crawl the data source to the data catalog

- The crawled metadata in Glue tables

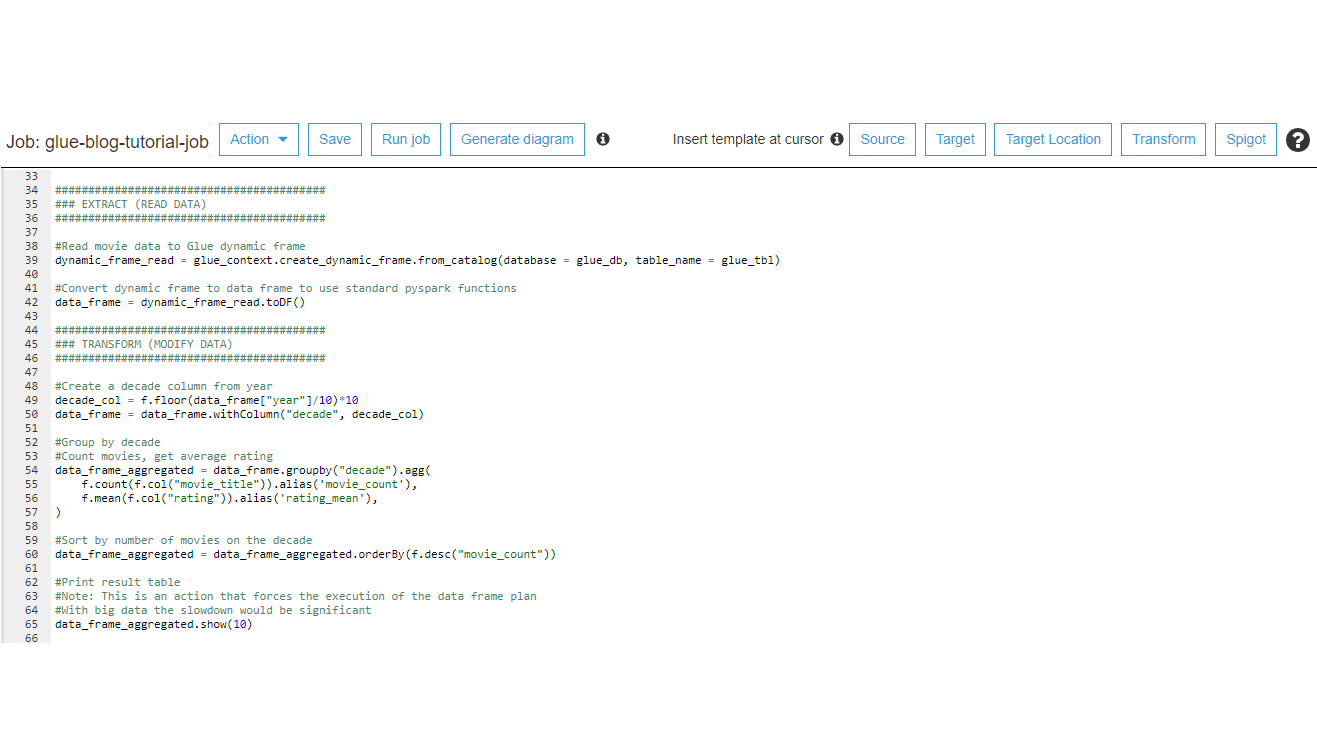

- AWS Glue jobs for data transformations

- Editing the Glue script to transform the data with Python and Spark

- Speeding up Spark development with Glue dev endpoint

- About Glue performance

- Summary of the Glue tutorial with Python and Spark
